Beginning The Quest For Serverless CQRS & Event Sourcing

The Quest

This is the first in a series of blog posts exploring the potential for building serverless, event-sourced CQRS systems on AWS. First we’re going to lay out the whats and the whys of CQRS and event sourcing, some of the constraints we’re under, and some initial spikes that look promising.

By the end of the quest we hope to have an example repository that you can fork and extend to start your own Serverless CQRS journey of discovery.

Why should I care about CQRS & Event Sourcing?

In a general sense, there is always something to learn from any new style of application or architecture. We live in a world of trade-offs, and most advancements come from transferring ideas between domains rather than from brand new insight.

In a more direct sense, I believe CQRS and Event Sourcing are becoming invaluable tools to have in one’s arsenal. Having built a series of systems based on their principles, I found they quickly became my default for high complexity, high value problems, due to repeated success.

Why CQRS?

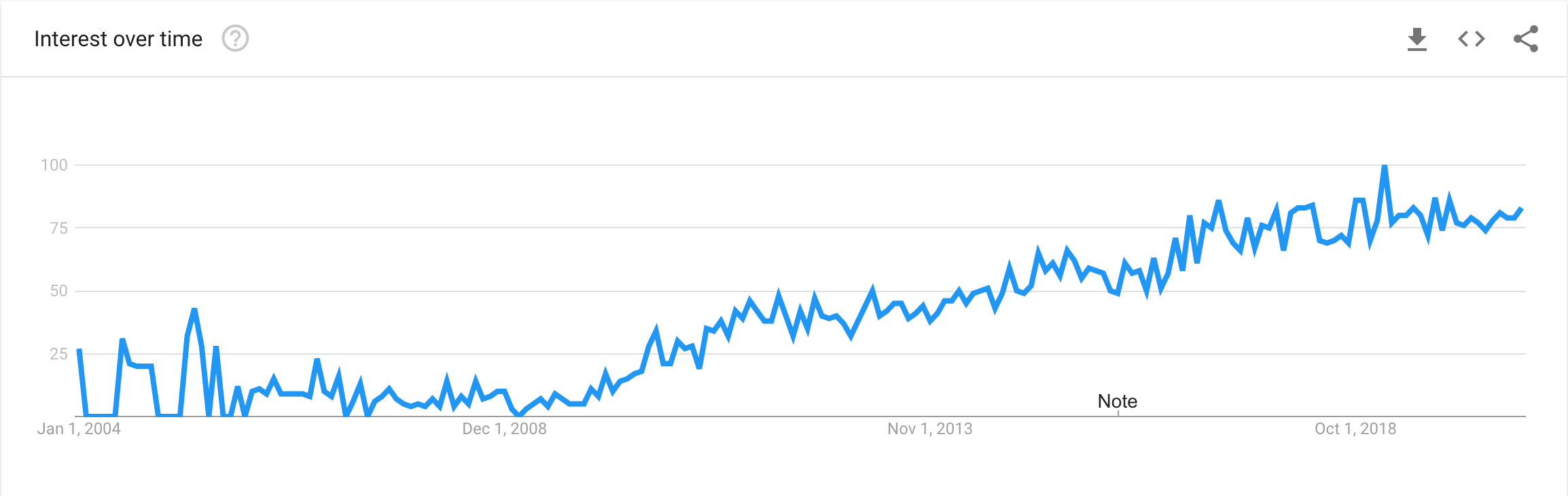

In the early 2000s, Greg Young shared Command Query Responsibility Segregation (CQRS) with the world, a pattern for building scalable, extensible, domain-driven systems. Over the intervening years interest has trended upwards:

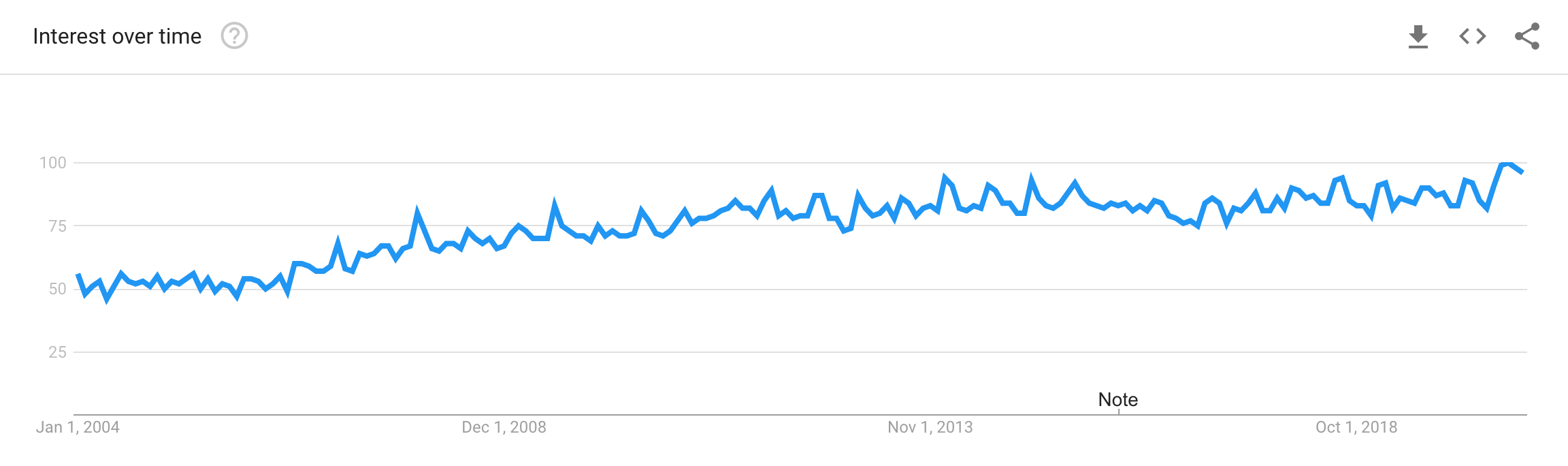

This is not dissimilar to the trend for Domain Driven Design (DDD) as a whole.

Two key reasons that I believe underlie this trend are:

- Scalability is fundamental. Businesses rightly expect more of their systems, especially with the elasticity that public cloud provides

- Legacy systems are impeding progress. They get exponentially harder to work on, and CQRS and Event Sourcing provide a path to break the cycle

What is CQRS with Event Sourcing?

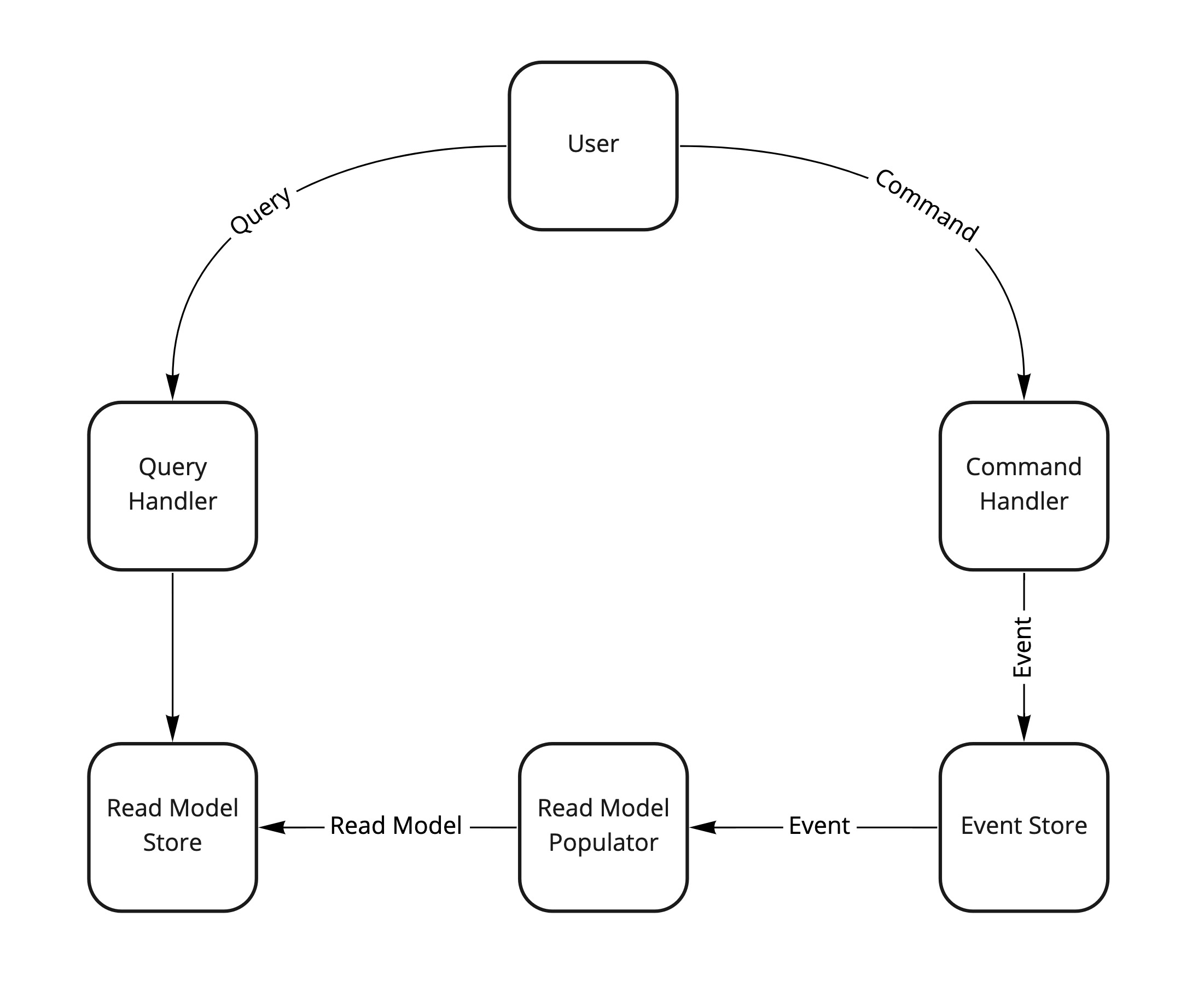

Let’s look at the data flows through the system first, using a banking app as an example:

- Commands -> Commands are statements of intent from a user. They can be rejected, and each is aimed at a distinct aggregate. Think “Deposit Money”

- Aggregate -> An aggregate is an instance of a domain object. Think “Josh’s Account”

- Events -> Events are statements of fact. They are persisted forever and cannot be rejected. Think “Money Deposited”

- Queries -> Queries are asking to get data out of the system. Think “Get Account Balance”

- Read Models -> Read Models are an opinion of current state. Rather than have to compute the account balance on demand, we can keep a running tally as events enter the system and present that back
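
To make the vocabulary above concrete, here is a minimal sketch of the banking example in Python. The class and function names (`DepositMoney`, `MoneyDeposited`, `handle_deposit`, `apply_to_balance`) and the "deposits must be positive" rule are illustrative assumptions, not part of any particular framework.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class DepositMoney:
    """Command: a statement of intent, which may be rejected."""
    account_id: str  # the aggregate the command is aimed at
    amount: Decimal


@dataclass(frozen=True)
class MoneyDeposited:
    """Event: a statement of fact, never rejected once recorded."""
    account_id: str
    amount: Decimal


class CommandRejected(Exception):
    """Raised when a command fails validation."""


def handle_deposit(command: DepositMoney) -> MoneyDeposited:
    # Hypothetical business rule: deposits must be positive.
    if command.amount <= 0:
        raise CommandRejected("deposit amount must be positive")
    return MoneyDeposited(command.account_id, command.amount)


def apply_to_balance(balance: Decimal, event: MoneyDeposited) -> Decimal:
    # Read model: keep a running tally as events arrive.
    return balance + event.amount
```

Note that only the command can fail. Once `MoneyDeposited` exists it is simply applied.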

Now let’s break down the individual components:

- User -> A user of the system, could be a customer, a teller or another system

- Command Handler -> Handles the commands to the system

- Event Store -> An immutable event ledger

- Read Model Populator -> A process which tails the event store and computes read models

- Read Model Store -> A fit for purpose store

- Query Handler -> Handles queries by accessing read model stores

Commands and queries are served from opposite sides, hence segregation.
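
The components above can be wired together in a few lines. The following is an in-memory sketch only, assuming a Python list as the event store and a dictionary as the read model store; all class names are hypothetical stand-ins for the real services.

```python
from collections import defaultdict


class EventStore:
    """Append-only, in-memory stand-in for an immutable event ledger."""

    def __init__(self):
        self._events = []

    def append(self, event: dict) -> None:
        self._events.append(dict(event))

    def read_from(self, position: int) -> list:
        return list(self._events[position:])


class CommandHandler:
    """Write side: validates commands and records the resulting events."""

    def __init__(self, store: EventStore):
        self._store = store

    def deposit(self, account_id: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("deposit amount must be positive")
        self._store.append(
            {"type": "MoneyDeposited", "account_id": account_id, "amount": amount}
        )


class ReadModelPopulator:
    """Tails the event store and maintains a balance per account."""

    def __init__(self, store: EventStore, balances: dict):
        self._store = store
        self._balances = balances
        self._position = 0  # how far through the ledger we have read

    def catch_up(self) -> None:
        new_events = self._store.read_from(self._position)
        for event in new_events:
            if event["type"] == "MoneyDeposited":
                self._balances[event["account_id"]] += event["amount"]
        self._position += len(new_events)


class QueryHandler:
    """Read side: answers queries from the read model store only."""

    def __init__(self, balances: dict):
        self._balances = balances

    def get_account_balance(self, account_id: str) -> int:
        return self._balances.get(account_id, 0)


store = EventStore()
balances = defaultdict(int)
commands = CommandHandler(store)
populator = ReadModelPopulator(store, balances)
queries = QueryHandler(balances)

commands.deposit("josh", 50)
stale = queries.get_account_balance("josh")  # populator has not run yet
populator.catch_up()
fresh = queries.get_account_balance("josh")
```

The command handler never touches the read model and the query handler never touches the event store, which is the segregation in practice. The query only reflects the deposit once the populator has caught up.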

Now let’s look at why this is powerful.

The Power of Relaxing Consistency

Strong vs Eventual Consistency

When it comes to consistency we have two options, one is strong and one is eventual, and they give us different guarantees.

Strong consistency means that for the same question we always get the same, most up-to-date answer, in exchange for a performance impact. For example, AWS DynamoDB strongly consistent reads consume twice the read capacity of eventually consistent reads and can have higher latency.
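
As a worked example of that cost difference, the sketch below estimates read capacity units (RCUs) for a single DynamoDB read in provisioned mode, based on the documented rule that one RCU covers one strongly consistent read, or two eventually consistent reads, of an item up to 4 KB. The function name is hypothetical and the calculation ignores transactional reads.

```python
import math


def read_capacity_units(item_size_bytes: int, strongly_consistent: bool) -> float:
    """Estimate RCUs consumed by one read of a single item."""
    # Item size is rounded up to the next 4 KB block.
    blocks = max(1, math.ceil(item_size_bytes / 4096))
    # An eventually consistent read costs half as much per block.
    return blocks * (1.0 if strongly_consistent else 0.5)


strong = read_capacity_units(5000, strongly_consistent=True)
eventual = read_capacity_units(5000, strongly_consistent=False)
```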

Eventual consistency means that given enough time, we get the same answer. By embracing this we open up more architectural options.

In CQRS, queries are answered with eventual consistency, as they are “opinions”.

However, commands are handled with strong consistency, as they result in “facts”.

By segregating commands and queries we are able to optimise each side specifically. Where we need stronger guarantees we have them, and where we have more freedom we take full advantage.
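
One common way to get strong consistency on the command side is an optimistic version check when appending events. This is an assumption for illustration rather than something this series has settled on, and `AggregateStream` and `ConcurrencyError` are hypothetical names.

```python
class ConcurrencyError(Exception):
    """Raised when another writer appended to the stream first."""


class AggregateStream:
    """Events for a single aggregate, guarded by an expected version."""

    def __init__(self):
        self._events = []

    @property
    def version(self) -> int:
        return len(self._events)

    def append(self, event: dict, expected_version: int) -> None:
        # Reject the write if the caller made its decision on stale state.
        if expected_version != self.version:
            raise ConcurrencyError(
                f"expected version {expected_version}, found {self.version}"
            )
        self._events.append(event)


stream = AggregateStream()
stream.append({"type": "MoneyDeposited", "amount": 10}, expected_version=0)
```

A second writer that also read version 0 would now be rejected and would have to reload the aggregate and retry.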

The Event Store Core

Let’s look a bit deeper into event stores.

An event store is an immutable ledger of events. All data traversing the system is persisted as events, allowing us to rebuild state on demand.
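
Rebuilding state on demand amounts to folding over the stored events. Here is a minimal sketch, assuming events are plain dictionaries; the `MoneyWithdrawn` event type is a hypothetical addition to the banking example.

```python
from functools import reduce


def apply(balance: int, event: dict) -> int:
    """Apply one event to the current balance."""
    if event["type"] == "MoneyDeposited":
        return balance + event["amount"]
    if event["type"] == "MoneyWithdrawn":  # hypothetical event type
        return balance - event["amount"]
    return balance  # unknown events leave the state untouched


def rebuild_balance(events: list) -> int:
    """Recompute the balance from scratch by replaying every event."""
    return reduce(apply, events, 0)


ledger = [
    {"type": "MoneyDeposited", "amount": 100},
    {"type": "MoneyWithdrawn", "amount": 30},
    {"type": "MoneyDeposited", "amount": 5},
]
balance = rebuild_balance(ledger)
```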

Global Chronological Event Ordering

A common event store debate is whether chronological ordering of events is required.

The key to this discussion is in the mathematical concept of commutativity, i.e. is order important?

If replaying events in any order has the same outcome, then the events are commutative, which removes the need for order.

Within an aggregate, events are nearly always non-commutative. For example, if I have two events for changing my address, the final state depends on the order in which they are processed.
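
The difference can be checked directly by replaying the same events in every possible order. The helper names below are hypothetical, and the brute-force permutation check is only practical for a handful of events.

```python
from itertools import permutations


def replay(events, initial, apply):
    """Fold the events into a final state, in the order given."""
    state = initial
    for event in events:
        state = apply(state, event)
    return state


def is_order_independent(events, initial, apply) -> bool:
    """True if every ordering of the events yields the same final state."""
    outcomes = {replay(order, initial, apply) for order in permutations(events)}
    return len(outcomes) == 1


# Deposits commute: addition gives the same total in any order.
deposits_commute = is_order_independent(
    [10, 20, 30], 0, lambda balance, amount: balance + amount
)

# Address changes do not: the last one processed wins.
addresses_commute = is_order_independent(
    ["1 Old Road", "2 New Street"], "", lambda _current, new: new
)
```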

Across aggregates, you can:

- design for allowing non-commutative events

- design to never have them

- sacrifice replay consistency.

The first can be achieved through global ordering. The second is possible only in highly exceptional edge cases. The third discards one of the unique properties of the pattern.
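
As a sketch of what global ordering means in practice, the store below stamps every event with a single, monotonically increasing sequence number, regardless of which aggregate it belongs to. It is an in-memory illustration with hypothetical names; producing such a sequence in a distributed, serverless setting is the hard part and is not shown here.

```python
import itertools


class GloballyOrderedStore:
    """Assigns one sequence number across all aggregates."""

    def __init__(self):
        self._sequence = itertools.count(1)
        self._events = []

    def append(self, aggregate_id: str, payload: dict) -> int:
        sequence_number = next(self._sequence)
        self._events.append((sequence_number, aggregate_id, payload))
        return sequence_number

    def replay(self) -> list:
        # Always replay in global sequence order, so every replay
        # sees cross-aggregate events in the same order.
        return sorted(self._events, key=lambda entry: entry[0])


store = GloballyOrderedStore()
first = store.append("account-josh", {"type": "MoneyDeposited", "amount": 10})
second = store.append("account-sam", {"type": "MoneyDeposited", "amount": 20})
third = store.append("account-josh", {"type": "MoneyDeposited", "amount": 5})
order = [aggregate_id for _, aggregate_id, _ in store.replay()]
```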

Conclusion

For the purposes of this quest I’m going to include global ordering for one very simple reason, reversibility:

- Having global ordering is an easily reversible decision

- Not having global ordering is incredibly difficult, approaching impossible, to reverse

What Constraints Does Serverless Imply?

I want to be able to build the entire solution using only serverless AWS services, with no idle running cost.

For compute, the obvious option is Lambda functions. Event sourcing marries well with event-driven compute, and we can front the functions with REST APIs to allow us to interact with the system. The more interesting choices are for our event store. In terms of serverless databases on AWS we have:

- Serverless Aurora

- DynamoDB

- Quantum Ledger Database

- Timestream

- Neptune

- Keyspaces

The first three databases are, on first inspection, the most interesting.

Next time we’ll be investigating Serverless Aurora, as a full SQL database means we are on well-trodden paths, before branching off into more experimental options.